iOS App Development

Introduction

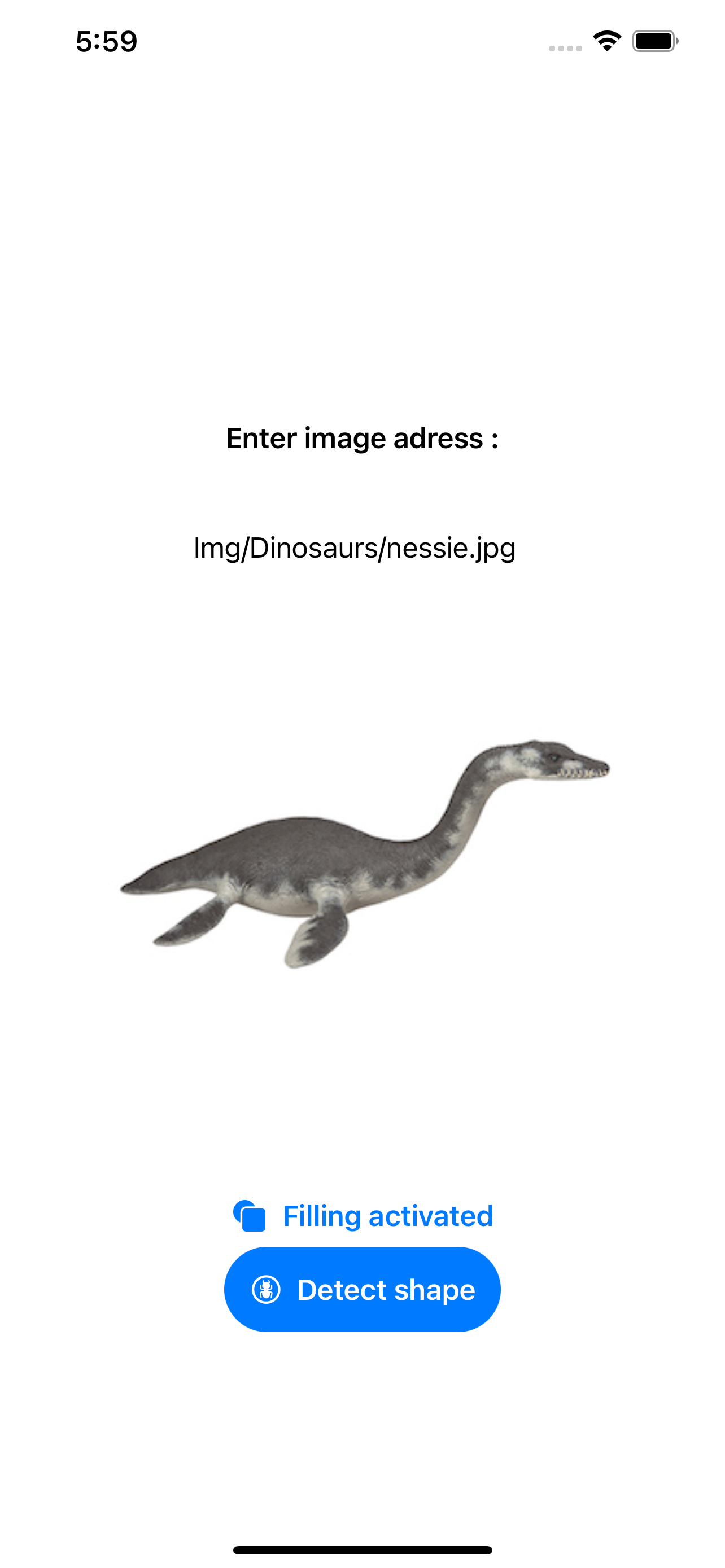

I did an internship with a self-employed iOS app developer, Good Life App, during the summer of 2020. My mission was to help him improve his LogoMaker application by adding a large number of templates. I had to find a way to create vector images for these templates from given images, i.e., lists of coordinates along the outlines of the shapes in the original images. To do this, I first worked with AI models running on Apple's CoreML framework, and then worked directly on pixel matrices to find the foreground shapes. I also had to find a way to generate backgrounds to be used in the logos.

Using CoreML

My first step was to ask myself: "How can we recognize a shape in an image?" Artificial intelligence seemed to be the best way to do it. I had studied small, basic algorithms, but nothing of this magnitude, so I had to learn more about image recognition algorithms. Two families of algorithms seemed to fit my mission, object detection and image segmentation, but after more research I focused on image segmentation, which seemed better suited because it assigns a class to every pixel instead of only drawing a bounding box around each object. I found a model called "DeepLab" on the CoreML Models page of the Apple Developer website and integrated it into a new application.

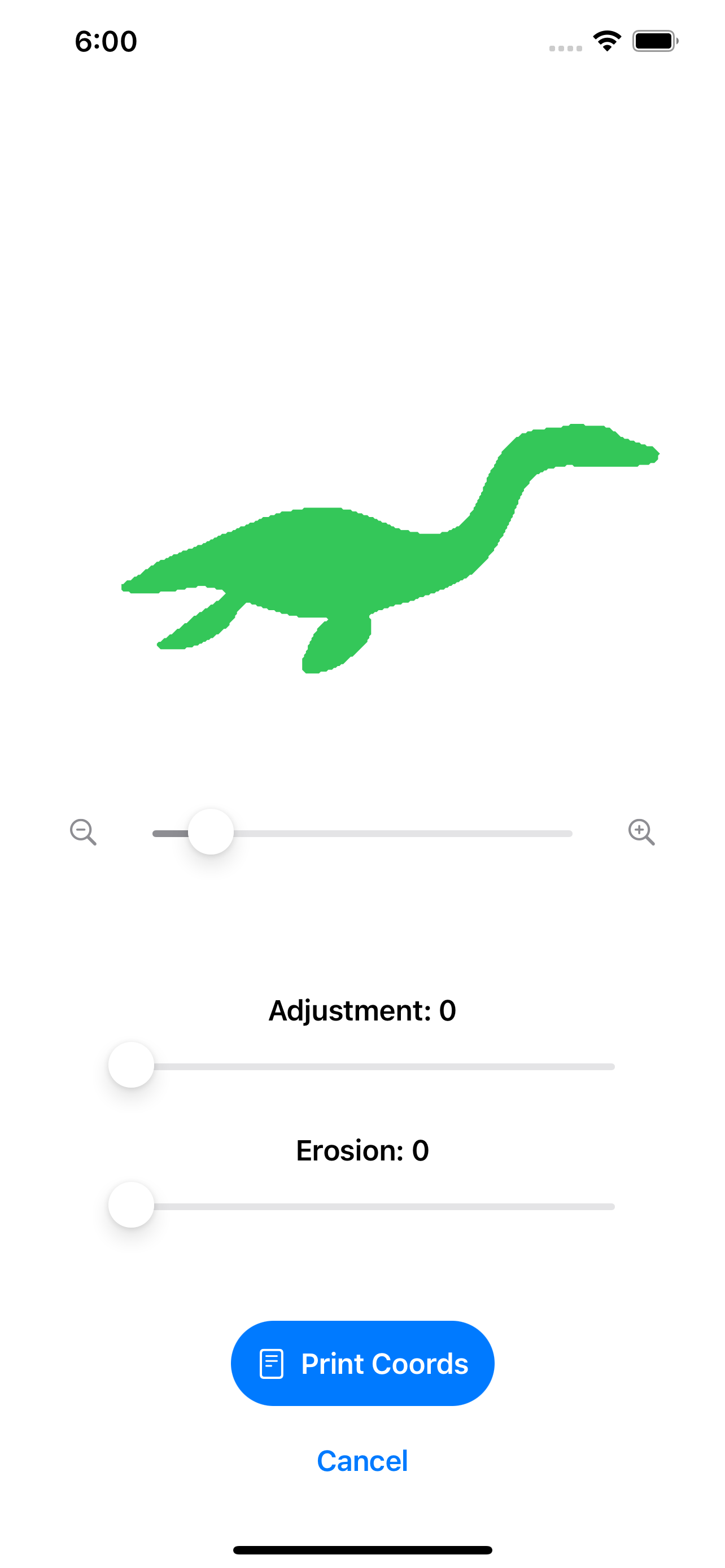

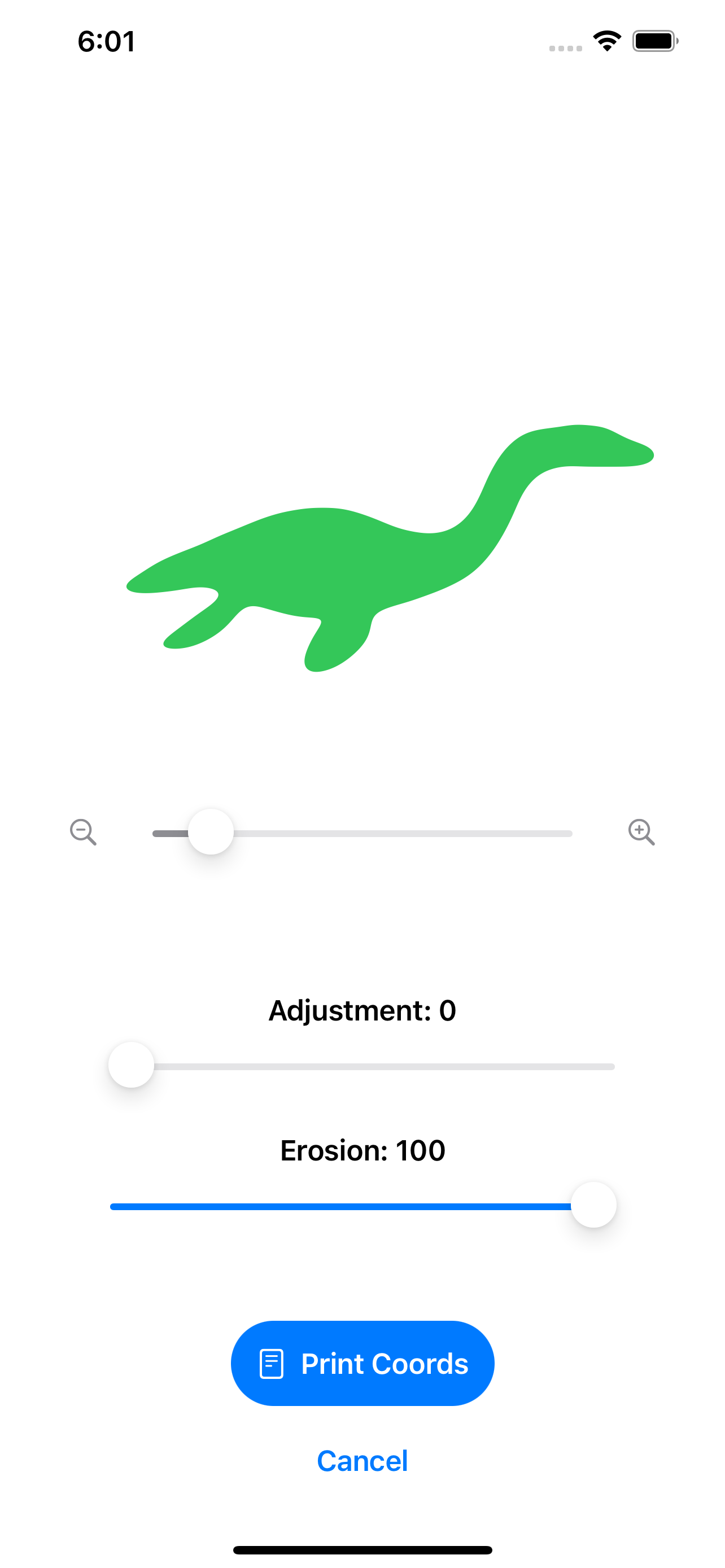

The DeepLab model takes a 513×513-pixel image as input and outputs a 513×513 matrix of class IDs, one per pixel. My idea was to build a new matrix from this one containing 0s (the pixel is not part of the shape) and 1s (the pixel is part of the shape), and then to keep only the 1s with at most three direct (non-diagonal) neighbors equal to 1, because a 1 whose four neighbors are all 1s lies in the interior of the shape. This gave me a list of outline coordinates, which I sorted using basic geometric distance calculations.

However, the resulting outline was slightly pixelated, so I added a contour-smoothing algorithm: each point in the coordinate list is replaced by the mean of itself and the next point. I made it possible to apply this algorithm up to 100 times, so that the smoothing intensity could be chosen.
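A minimal Swift sketch of the mask and outline steps is shown below. It assumes the DeepLab output has already been copied out of CoreML into a plain `[[Int]]` matrix and that class ID 0 means background; `binaryMask(fromLabels:)` and `outlinePoints(of:)` are hypothetical names, and positions outside the image are treated as background.

```swift
// Hypothetical helpers for illustration; these are not CoreML APIs.

/// Converts a DeepLab-style matrix of class IDs into a 0/1 mask.
/// Assumption: class ID 0 is background; every other ID belongs to the shape.
func binaryMask(fromLabels labels: [[Int]]) -> [[Int]] {
    labels.map { row in row.map { $0 == 0 ? 0 : 1 } }
}

/// Returns the (x, y) coordinates of every 1 that has at most three of its four
/// direct (non-diagonal) neighbors equal to 1. Positions outside the matrix count
/// as 0, so shape pixels on the image border are kept as outline points.
func outlinePoints(of mask: [[Int]]) -> [(x: Int, y: Int)] {
    let height = mask.count
    guard height > 0 else { return [] }
    let width = mask[0].count

    // Reads a cell, treating out-of-bounds positions as background (0).
    func cell(_ x: Int, _ y: Int) -> Int {
        ((0..<width).contains(x) && (0..<height).contains(y)) ? mask[y][x] : 0
    }

    var points: [(x: Int, y: Int)] = []
    for y in 0..<height {
        for x in 0..<width where mask[y][x] == 1 {
            let neighbors = cell(x - 1, y) + cell(x + 1, y) + cell(x, y - 1) + cell(x, y + 1)
            // Four neighbors equal to 1 means the pixel is inside the shape.
            if neighbors <= 3 {
                points.append((x: x, y: y))
            }
        }
    }
    return points
}
```

For a solid 3×3 square, this keeps the eight border pixels and drops only the center one.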

Segmentation with CoreML: original, filled outline and smoothed filled outline
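The sorting and smoothing steps described above can be sketched in Swift as well. The text does not say exactly how the points were sorted, so the hypothetical `orderByNearestNeighbor(_:)` assumes a greedy nearest-neighbor walk. The hypothetical `smooth(_:passes:)` assumes the outline is closed, so the last point is averaged with the first; its `passes` parameter plays the role of the smoothing intensity.

```swift
/// A 2-D point with Double coordinates, so smoothing can produce fractional values.
struct Point: Equatable {
    var x: Double
    var y: Double
}

/// Hypothetical helper: orders points into a path by repeatedly moving to the
/// closest point not yet visited (greedy nearest neighbor, O(n²)). This is one
/// plausible reading of "sorted with basic geometrical distance calculation".
func orderByNearestNeighbor(_ points: [Point]) -> [Point] {
    var remaining = points
    guard !remaining.isEmpty else { return [] }
    var path = [remaining.removeFirst()]
    while !remaining.isEmpty {
        let last = path[path.count - 1]
        var bestIndex = 0
        var bestDistance = Double.infinity
        for (index, candidate) in remaining.enumerated() {
            // Squared Euclidean distance is enough to compare candidates.
            let dx = candidate.x - last.x
            let dy = candidate.y - last.y
            let distance = dx * dx + dy * dy
            if distance < bestDistance {
                bestDistance = distance
                bestIndex = index
            }
        }
        path.append(remaining.remove(at: bestIndex))
    }
    return path
}

/// Hypothetical helper: replaces each point by the midpoint between it and the
/// next point, `passes` times. Assumption: the outline is closed, so the last
/// point is averaged with the first one.
func smooth(_ points: [Point], passes: Int) -> [Point] {
    var current = points
    let count = current.count
    guard count > 1 else { return current }
    for _ in 0..<max(passes, 0) {
        // Read from a copy so every point is averaged with its pre-pass neighbor.
        let previous = current
        for i in 0..<count {
            let next = previous[(i + 1) % count]
            current[i] = Point(x: (previous[i].x + next.x) / 2,
                               y: (previous[i].y + next.y) / 2)
        }
    }
    return current
}
```

Because each pass reads only the coordinates from the previous pass, the result does not depend on the order in which the points are updated.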

However, the model sometimes failed and included parts of the background in the list of shape coordinates. I discussed this with my internship tutor, and we decided to work differently: pixel by pixel, on white-background images.

Working on pixel matrices

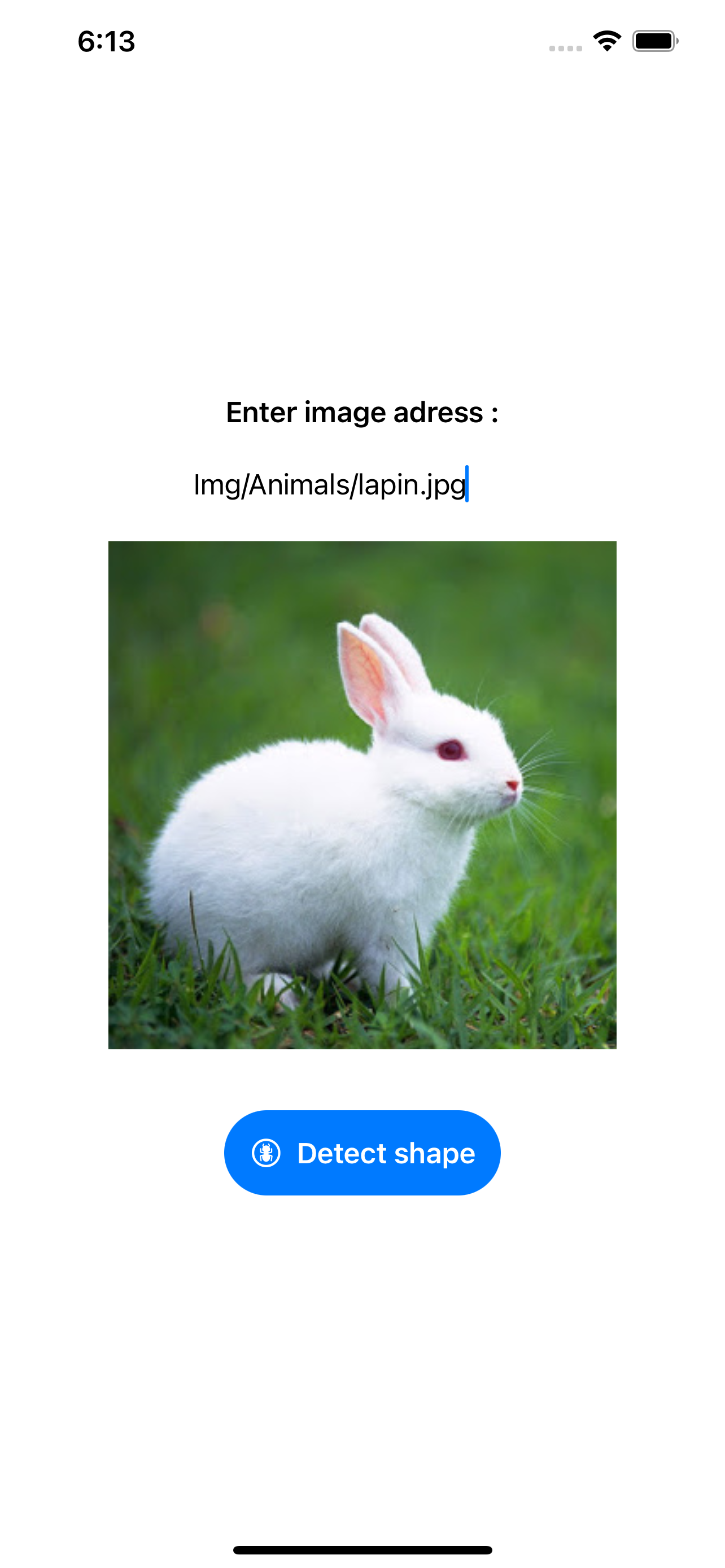

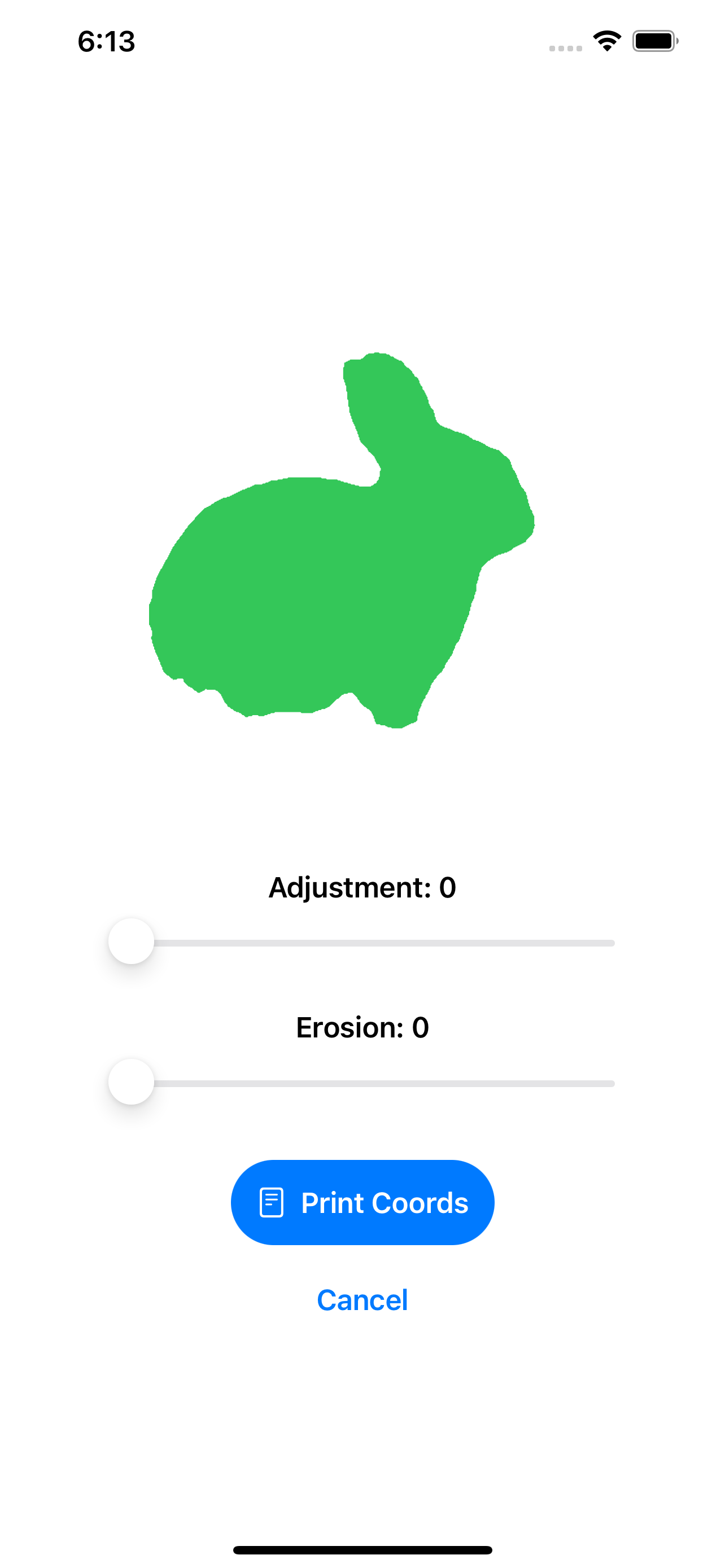

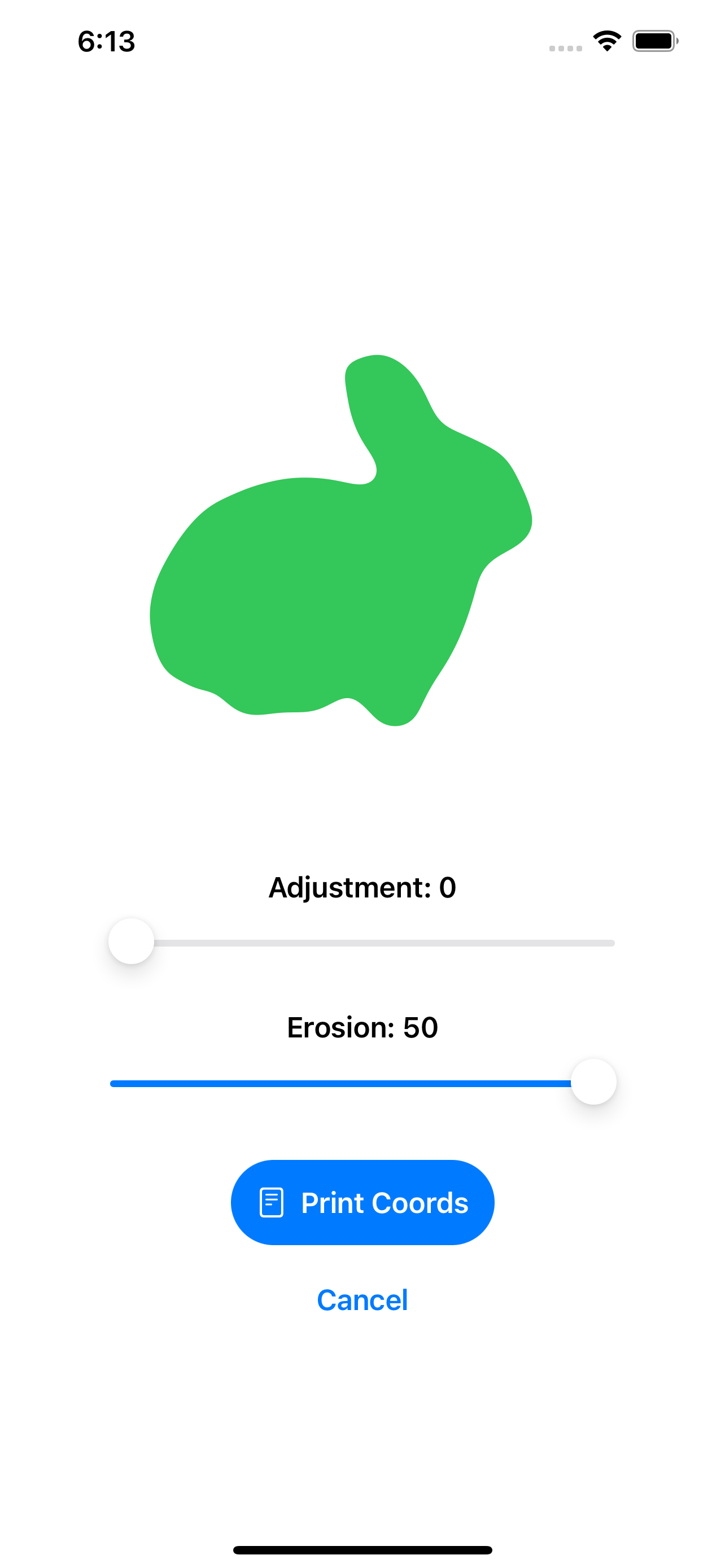

Since we had decided to focus on white-background images, I adapted my algorithm to work on a matrix of RGB values instead of a matrix of class IDs. Any pixel whose red, green, and blue values all lie in [240, 255] was considered background. The rest of the process was the same: create a matrix of 0s (background) and 1s (foreground), keep the 1s with at most three neighbors equal to 1, get a list of coordinates, and sort it.

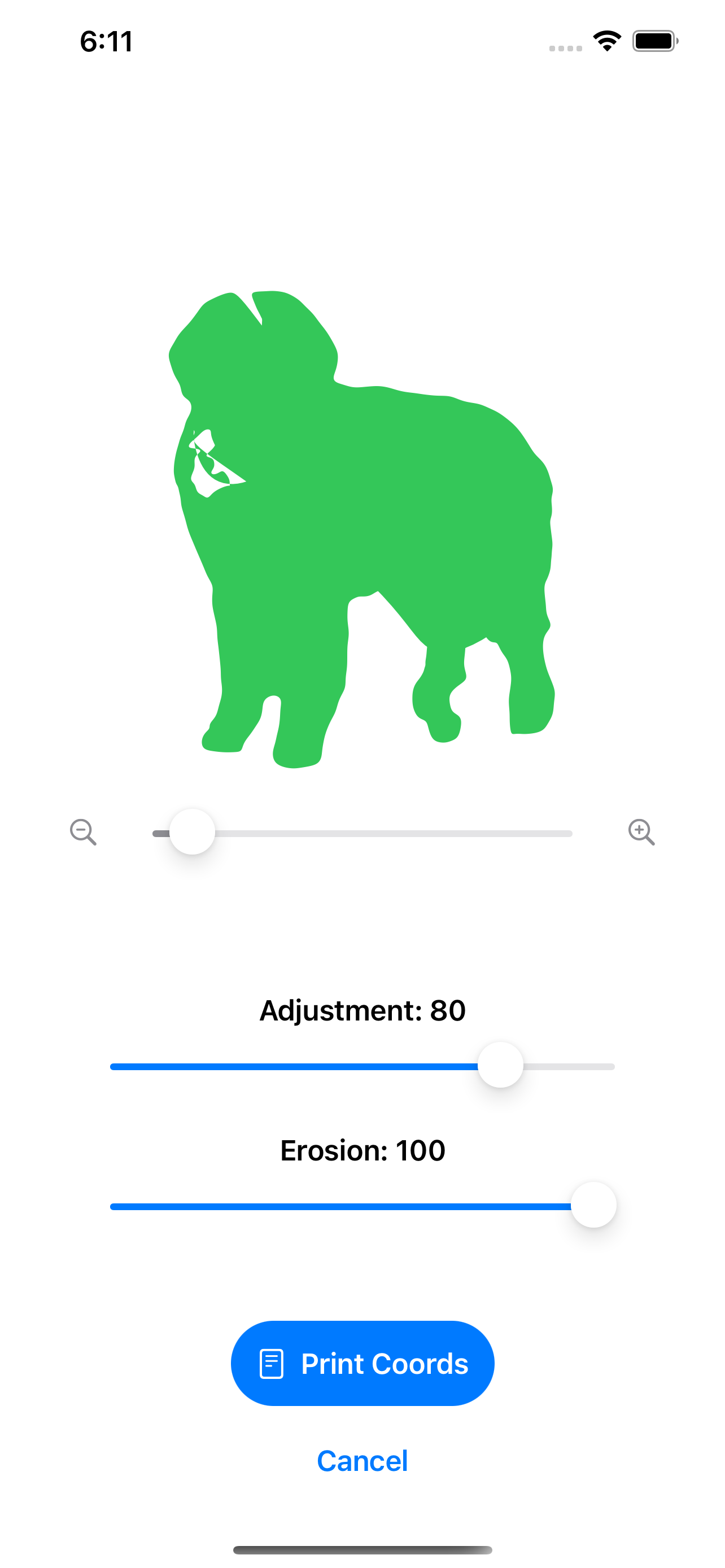

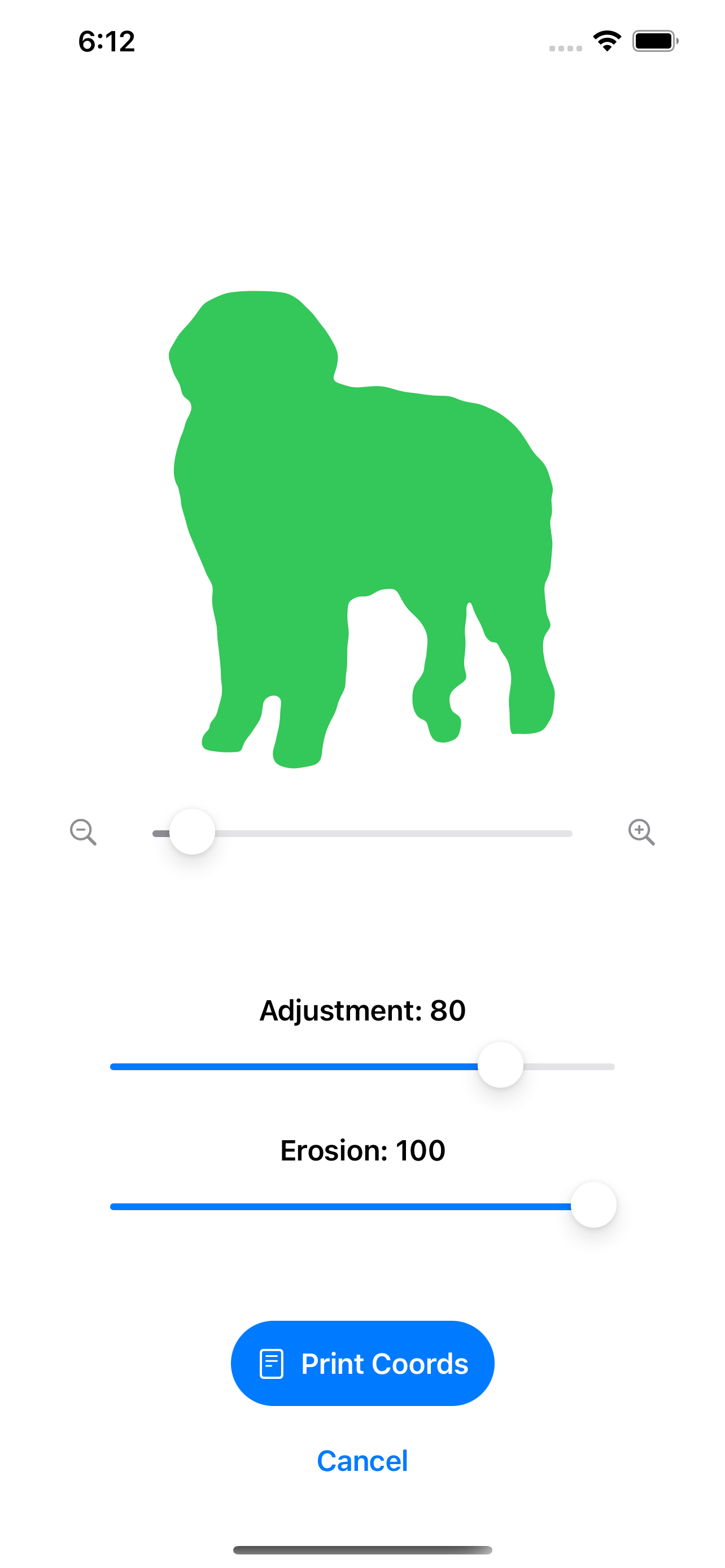

Working on pixels: original, filled outline and eroded filled outline
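The background test can be sketched in a few lines of Swift. This assumes the image has already been decoded into a matrix of 8-bit RGB values; the `RGB` struct and the `isBackground(_:)` and `foregroundMask(_:)` functions are hypothetical names used only for illustration.

```swift
/// Hypothetical 8-bit RGB pixel type for this sketch.
struct RGB {
    var r: UInt8
    var g: UInt8
    var b: UInt8
}

/// Background test from the text: red, green and blue all in [240, 255].
func isBackground(_ pixel: RGB) -> Bool {
    let nearWhite: ClosedRange<UInt8> = 240...255
    return nearWhite.contains(pixel.r) && nearWhite.contains(pixel.g) && nearWhite.contains(pixel.b)
}

/// Builds the 0/1 matrix: 0 for background pixels, 1 for foreground pixels.
func foregroundMask(_ pixels: [[RGB]]) -> [[Int]] {
    pixels.map { row in row.map { isBackground($0) ? 0 : 1 } }
}
```

The resulting 0/1 matrix has the same form as the one built from DeepLab's class IDs, which is why the outline extraction and sorting steps could stay unchanged.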

But what if the foreground shape contained some white? The algorithm would classify those pixels as background, creating holes in the shape. I had this problem with some images, so I added a matrix completion method, effective but computationally expensive, to fill the holes in the matrix of 0s and 1s. The idea was simple: recursively, each 0 inside the shape whose left neighbor and top neighbor are both 1 takes the value 1.

Problematic image: original, without matrix completion method and with matrix completion method
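The completion rule can be sketched as follows, applied literally and repeated until nothing changes. `fillHoles(_:)` is a hypothetical name, and the mask is assumed to be rectangular (every row has the same length).

```swift
/// Hypothetical helper: applies the completion rule literally. A 0 whose left and
/// top neighbors are both 1 becomes 1, and passes repeat until nothing changes.
/// Row 0 and column 0 are never modified because they lack a top or left neighbor.
/// Assumes every row has the same length.
func fillHoles(_ mask: [[Int]]) -> [[Int]] {
    var result = mask
    var changed = true
    while changed {
        changed = false
        for y in stride(from: 1, to: result.count, by: 1) {
            for x in stride(from: 1, to: result[y].count, by: 1) where result[y][x] == 0 {
                if result[y][x - 1] == 1 && result[y - 1][x] == 1 {
                    result[y][x] = 1
                    changed = true
                }
            }
        }
    }
    return result
}
```

Because each update reads only the left and top neighbors, which a row-major scan has already visited and will not change again, this version reaches its final result in the first pass; the second pass only confirms that nothing changed. Taken literally, the rule also fills background regions bounded by the shape on their top and left sides (for example, below and to the right of the corner of an upside-down L), so it suits compact shapes best.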

This algorithm was arguably more precise than the segmentation-based one, but it only worked on white-background images, and because of its high complexity it took longer to run.